TL;DR

- Your PE owners are asking: “Which AI initiatives actually moved EBITDA?” If you can’t answer with numbers, your next budget gets cut.

- By the MIT NANDA Initiative’s 2025 estimate, 95% of enterprise generative AI pilots fail to show measurable ROI. A common reason: companies measure adoption, not impact. You need attribution rigor before you need more tools.

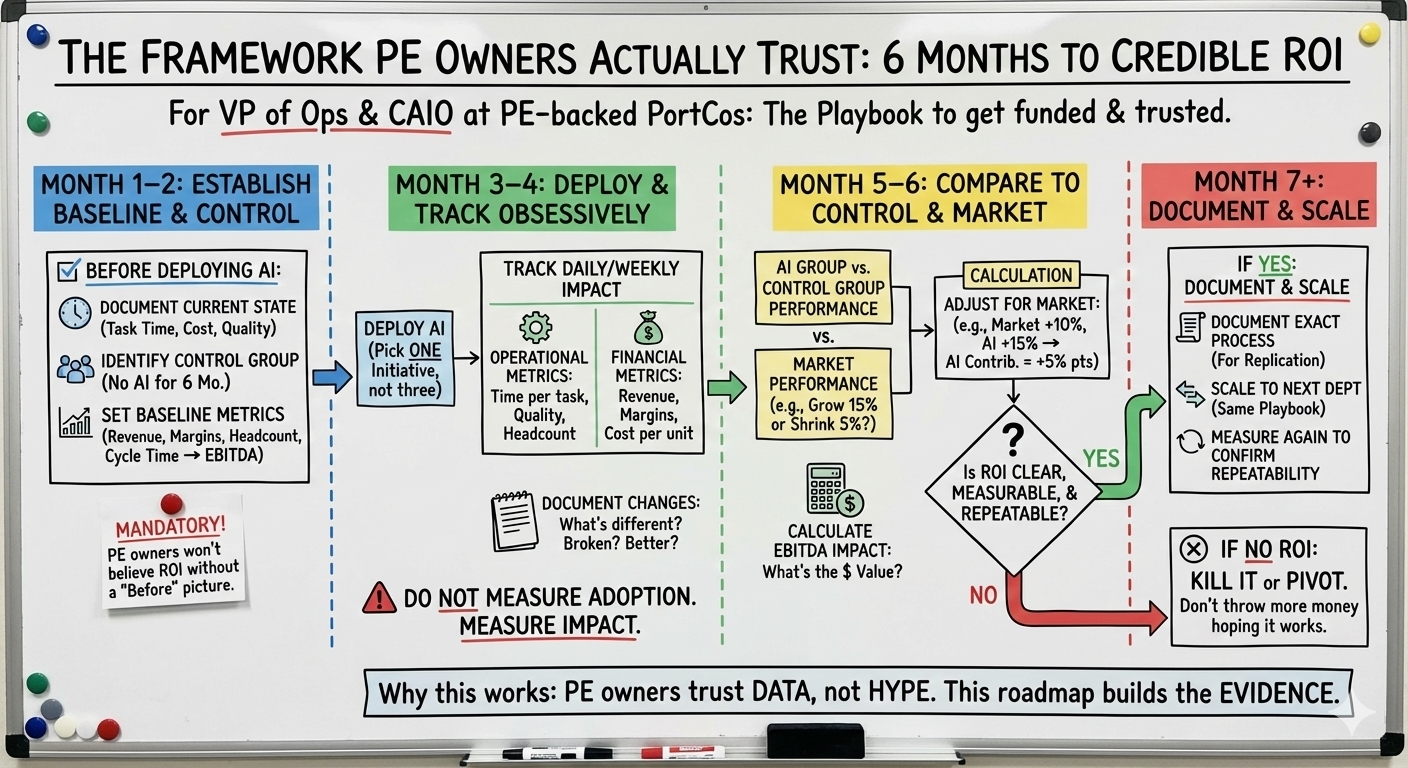

- The 6-month playbook: baseline → deploy → measure against control → scale. Follow this, and you’ll have proof your PE owners can’t ignore.

Your PE firm just approved the budget for three AI initiatives. The kickoff meeting was last week. Your CEO is excited. Your CFO is skeptical. And you are stuck in the middle. In 6 months, your PE owners are going to ask: “What did this cost, and what did we get back in EBITDA?”

If your answer is “We launched three tools, adoption is up 40%, and everyone’s using it,” you’ve already lost. That’s not an answer. That’s an excuse. A vanity metric at best.

What actually matters to PE?

- Did EBITDA expand?

- Can you prove it was AI, not market luck?

- Is it scalable?

Here’s how to measure so the answer is yes.

Why Measurement Matters for You (And Your PE Owners)

Your PE firm didn’t buy this company to run experiments. They have a 3–5 year hold period and a specific EBITDA target at exit. Every dollar you spend on AI either contributes to hitting that target or it doesn’t. When you can prove AI moved EBITDA, three things happen:

- You get the next tranche of budget (PE firms fund what works)

- You build operational credibility (PE owners trust your decision-making)

- You create a playbook for the next AI initiative (scaling becomes possible)

Companies that rigorously measure AI ROI see 10% higher returns on investment than those that don’t.

But by the MIT NANDA Initiative’s 2025 estimate, 95% of enterprise generative AI pilots fail to show measurable ROI. Not because AI doesn’t work. Because measurement is hard and nobody wants to admit “we can’t prove the value.”

Instead, companies measure what’s easy:

- Tool adoption rates

- Hours spent using the system

- Number of AI initiatives launched

None of these touches the real question: Did EBITDA move?

Companies default to these because actual ROI measurement requires:

- Setting a baseline before you deploy

- Isolating AI’s impact from market conditions

- Comparing against a control (a department without AI)

- Tracking for 12+ weeks to get a reliable signal

That’s work. Most companies skip it. And 6 months later, when PE asks “what’s the ROI?”, they have no answer.
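To make the “12+ weeks” requirement concrete, here is a minimal sketch that compares weekly values of one outcome metric for an AI-deployed group and a control group, then reports the average weekly lift and a rough t-statistic on the week-by-week differences. The function name, the sample figures, and treating 12 weeks as a hard cutoff are illustrative assumptions, not a prescribed method.

```python
from __future__ import annotations

import math
import statistics

MIN_WEEKS = 12  # assumption: the "12+ weeks" guideline treated as a hard minimum


def weekly_lift(deployed: list[float], control: list[float]) -> tuple[float, float]:
    """Mean weekly lift of the deployed group over the control, plus a rough t-statistic.

    Both lists hold the same outcome metric (e.g. revenue per deal), one value
    per week, covering the same weeks.
    """
    if len(deployed) != len(control):
        raise ValueError("deployed and control must cover the same weeks")
    if len(deployed) < MIN_WEEKS:
        raise ValueError(f"need at least {MIN_WEEKS} weeks of data, got {len(deployed)}")
    diffs = [d - c for d, c in zip(deployed, control)]
    mean_diff = statistics.mean(diffs)
    # Standard error of the mean difference: sample standard deviation / sqrt(n).
    se = statistics.stdev(diffs) / math.sqrt(len(diffs))
    # The t-statistic is undefined if every week shows exactly the same difference.
    t_stat = mean_diff / se if se > 0 else float("nan")
    return mean_diff, t_stat


# Hypothetical weekly revenue per deal ($K) for the 12 weeks after rollout.
deployed = [104, 106, 103, 108, 107, 110, 109, 111, 112, 110, 113, 115]
control = [100, 101, 100, 102, 101, 103, 102, 103, 104, 103, 104, 105]
lift, t = weekly_lift(deployed, control)
print(f"mean weekly lift: {lift:.2f}, t = {t:.1f}")
```

A |t| well above 2 suggests the lift is not just week-to-week noise. Weekly business data are often trending and autocorrelated, so treat this as a sanity check rather than proof. It also compares levels, which assumes the two groups were on par before rollout; if they were not, compare each group’s change from its own baseline instead.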

The Three Ways to Kill Your Credibility with PE Owners

Gap #1: Measuring Activity, Not Impact

What you’re probably tracking: “AI tool usage is up 60%. We’re winning.”

What PE actually cares about: “Did this reduce headcount needs? Did it speed up deal closing? Did it improve margins?”

Example: Your sales team uses an AI lead-scoring tool. They log in every day. Adoption looks perfect. But are they closing deals faster? Are they closing bigger deals? If the answer is “I don’t know,” you don’t have an ROI story. You have a tool that feels useful.

The fix: Stop measuring logins. Start measuring outcomes tied to EBITDA.

- Time saved per task

- Revenue per deal (for revenue-facing initiatives)

- Cost per unit (for operational initiatives)

- Headcount reduction (the cleanest metric for PE)
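“Time saved per task” only becomes an EBITDA number once it is converted into cost the business no longer incurs. A minimal sketch of that conversion, where the loaded hourly cost, the hours per FTE, and the realization rate (the share of freed time that actually turns into avoided hires, overtime, or contractor spend) are all hypothetical inputs you would replace with your own:

```python
from dataclasses import dataclass


@dataclass
class TimeSavings:
    minutes_saved_per_task: float  # measured against the documented pre-AI baseline
    tasks_per_year: int
    loaded_hourly_cost: float      # hypothetical fully loaded cost per hour ($)
    realization_rate: float        # hypothetical share of freed time that reduces cost (0-1)
    hours_per_fte: float = 1800.0  # hypothetical productive hours per FTE per year

    def __post_init__(self) -> None:
        if not 0 <= self.realization_rate <= 1:
            raise ValueError("realization_rate must be between 0 and 1")

    def hours_saved(self) -> float:
        return self.minutes_saved_per_task * self.tasks_per_year / 60

    def fte_equivalent(self) -> float:
        # Headcount-equivalent capacity freed up, before any realization haircut.
        return self.hours_saved() / self.hours_per_fte

    def ebitda_impact(self) -> float:
        # Only realized savings move EBITDA; freed time that goes unused does not.
        return self.hours_saved() * self.loaded_hourly_cost * self.realization_rate


# Hypothetical: 6 minutes saved on each of 50,000 tasks a year, half of it realized.
savings = TimeSavings(minutes_saved_per_task=6, tasks_per_year=50_000,
                      loaded_hourly_cost=55.0, realization_rate=0.5)
print(f"{savings.hours_saved():,.0f} hours, "
      f"{savings.fte_equivalent():.1f} FTE, "
      f"${savings.ebitda_impact():,.0f} annual EBITDA impact")
```

The realization rate is the honest part of the calculation: a team that saves 5,000 hours but keeps the same headcount and spend has freed capacity, not improved EBITDA.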

Gap #2: You Can’t Separate AI’s Impact from Market Luck

Your PortCo implemented an AI pricing optimizer. Revenue is up 12%.

Question for PE: How much of that was AI vs. the market growing 15% across your industry?

If you can’t answer that, PE assumes it’s all market luck, and AI gets zero credit. Real attribution requires:

- A baseline: What were the metrics before AI?

- A control group: A department or region without AI, showing how it performed over the same period

- Market adjustment: Did the market grow? By how much?

Example: If revenue without AI (control group) grew 8%, and revenue with AI (deployment group) grew 12%, then AI contributed roughly +4 percentage points, assuming the two groups were comparable before rollout. That’s measurable. That’s credible.
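The arithmetic in this example subtracts the control group’s growth from the deployment group’s growth, a simple difference-in-differences. A minimal sketch, assuming the control group absorbs market conditions and everything else that changed over the same period; the function names and revenue figures are hypothetical:

```python
def growth_pct(before: float, after: float) -> float:
    """Percentage growth from the pre-AI baseline period to the post-AI period."""
    if before <= 0:
        raise ValueError("baseline must be positive")
    return (after - before) / before * 100


def ai_contribution_pp(deploy_before: float, deploy_after: float,
                       control_before: float, control_after: float) -> float:
    """Growth attributable to AI, in percentage points: deployment minus control."""
    return growth_pct(deploy_before, deploy_after) - growth_pct(control_before, control_after)


# Hypothetical revenue ($) matching the example: deployment +12%, control +8%.
lift = ai_contribution_pp(deploy_before=10_000_000, deploy_after=11_200_000,
                          control_before=5_000_000, control_after=5_400_000)
print(f"AI contribution: {lift:+.1f} pp")  # AI contribution: +4.0 pp
```

Applied to the medical device case later in this article (company +15%, market +18%), the same calculation returns about -3 pp.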

Before you deploy AI, identify a control group. It can be:

- A different PortCo in the same sector (if you have sister companies)

- A different department in your company

- A region without the AI initiative

- Your own pre-AI baseline vs. post-AI (the weakest option, because on its own it can’t separate AI from market movement)

Gap #3: You’re Confusing Decentralized Pilots with Scaled Impact

You’ve launched AI in sales, operations, and finance. Each costs $150K–$250K. Each shows some promise. But here’s the trap you didn’t consider:

You’re running three separate pilots with three separate measurement frameworks and no shared playbook.

You can’t tell PE, “Here’s the model we’ll use to expand to other departments,” because you don’t have a model. You have three experiments.

The fix: Pick ONE AI initiative. Measure it ruthlessly. Prove it works. Document the exact process. Then replicate that process in the next department.

One win, proven and documented, is worth more to PE than three maybes.
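One way to enforce a single shared framework is to describe every initiative with the same fields and refuse to report ROI until each one is filled in. A minimal sketch of such a template; the class, its field names, and the 12-week minimum are hypothetical and simply mirror the requirements listed earlier (baseline, control group, market adjustment, 12+ weeks of tracking):

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class MeasurementPlan:
    """Hypothetical template reused unchanged for every AI initiative."""
    initiative: str
    department: str
    ebitda_metric: str               # e.g. "cost per unit" or "revenue per deal"
    baseline_value: Optional[float]  # documented BEFORE deployment
    control_group: Optional[str]     # department, region, or sister PortCo without AI
    market_benchmark: Optional[str]  # source for sector growth over the same period
    weeks_tracked: int = 0
    min_weeks: int = 12

    def gaps(self) -> list[str]:
        """What is still missing before the result is credible to PE owners."""
        missing = []
        if self.baseline_value is None:
            missing.append("pre-AI baseline")
        if not self.control_group:
            missing.append("control group")
        if not self.market_benchmark:
            missing.append("market adjustment")
        if self.weeks_tracked < self.min_weeks:
            missing.append(f"{self.min_weeks - self.weeks_tracked} more weeks of tracking")
        return missing


sales = MeasurementPlan(
    initiative="AI lead scoring", department="Sales",
    ebitda_metric="revenue per deal", baseline_value=42_000.0,
    control_group="West region (no AI)", market_benchmark=None,
    weeks_tracked=8,
)
print(sales.gaps())  # ['market adjustment', '4 more weeks of tracking']
```

Expanding to the next department then means filling in a new plan with the same fields, so results from sales and operations stay comparable.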

The Medical Device Company That Lost PE Trust

A PE-backed medical device company launched an AI-powered product recommendation engine. The thesis was solid: better recommendations = higher AOV (average order value) and customer lifetime value. Six months in, the CEO reported to the PE firm: “AI is driving 15% revenue uplift.”

The PE firm’s CAIO asked: “Compared to what baseline? And how do you know it’s AI and not market conditions?”

The CEO couldn’t answer. When the PE firm hired third-party auditors (Clarkston Consulting) to review the initiative, they discovered:

- No control group (no way to know what revenue would have been without AI)

- No pre-AI baseline (they didn’t document starting metrics)

- Market grew 18% that quarter (so the 15% uplift actually trailed the market by 3 percentage points)

Result: The PE firm discounted the initiative’s contribution to zero. The CEO lost credibility. The next AI budget got cut in half.

The lesson: PE owners don’t trust gut feel. They trust math. If you can’t show the math, they assume you’re either incompetent or lying.

The Framework PE Owners Actually Trust: 6 Months to Credible ROI

Here’s the playbook that will get you funded and trusted:

What to Tell Your PE Owners (And How to Say It)

Your PE firm’s CAIO will ask three questions. Here are the answers they want:

Question 1: “How much did EBITDA improve?”

Answer: “AI contributed +X bps to EBITDA margin. Here’s the calculation: baseline margin was Y%, post-AI margin is Z%, adjusted for market conditions.”

Not: “Adoption is up 40%.” Not: “We’re saving time.” The answer is a number tied to EBITDA.
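Getting X from Y and Z takes one subtraction for the raw margin change and one for the market adjustment. A minimal sketch, assuming the market adjustment is the margin change the control group (or the sector) saw over the same period; the function name and margins are hypothetical:

```python
def ai_margin_contribution_bps(baseline_margin_pct: float,
                               post_ai_margin_pct: float,
                               control_margin_change_pct: float) -> float:
    """EBITDA margin change attributable to AI, in bps (1 percentage point = 100 bps).

    baseline_margin_pct: Y, the EBITDA margin before deployment (%)
    post_ai_margin_pct: Z, the EBITDA margin after deployment (%)
    control_margin_change_pct: how much the control group's or sector's margin
        moved over the same period (percentage points)
    """
    raw_change_pct = post_ai_margin_pct - baseline_margin_pct
    return (raw_change_pct - control_margin_change_pct) * 100


# Hypothetical: margin went from 18.0% to 19.5% while the control group improved 0.5 points.
x_bps = ai_margin_contribution_bps(18.0, 19.5, 0.5)
print(f"AI contributed {x_bps:+.0f} bps to EBITDA margin")  # AI contributed +100 bps to EBITDA margin
```

Reporting the raw 150 bps instead would credit AI with the 50 bps the control group gained without it.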

Question 2: “How do you know it was AI and not market luck?”

Answer: “We compared the performance of the AI-deployed team to a control group in the same company and adjusted for sector growth. Here’s the data.”

Not: “We think it was AI.” Not: “Everyone says it’s faster.” The answer is a comparison.

Question 3: “Can we do this again in another department?”

Answer: “Yes. Here’s the documented process. We’ve already proven it works in [Department A]. We can replicate it in [Department B] using the same framework.”

Not: “Probably.” Not: “We’ll figure it out.” The answer is a playbook, not a guess.

If you can answer all three questions with data, PE will fund the next initiative. If you can’t, they’ll assume you’re wasting money. It’s as simple as that.

The Real Truth

You’re not being asked to become data scientists. You’re being asked to think like PE owners.

PE owners care about one thing: Did this PortCo’s valuation expand at exit because of AI?

That happens through two distinct levers:

Lever #1: EBITDA Expansion

Your AI cut costs or drove revenue. EBITDA went up. Margins improved. The company is worth more because it’s more profitable.

Lever #2: Multiple Expansion

Your AI de-risked the business (reduced churn, improved retention, made revenue predictable). EBITDA might stay flat, but the company is worth more because buyers pay higher multiples for lower-risk businesses.
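In a simple model, exit value is EBITDA times the multiple a buyer pays, so the two levers compound. A minimal sketch under that simplification (it ignores net debt, fees, and deal terms); the function names and figures are hypothetical:

```python
from __future__ import annotations


def enterprise_value(ebitda: float, multiple: float) -> float:
    """Simplified exit value: EBITDA times the EV/EBITDA multiple a buyer pays."""
    return ebitda * multiple


def value_bridge(base_ebitda: float, base_multiple: float,
                 new_ebitda: float, new_multiple: float) -> tuple[float, float]:
    """Split the change in exit value into the two levers.

    Lever 1 (EBITDA expansion) is valued at the original multiple.
    Lever 2 (multiple expansion) applies the re-rating to the new EBITDA.
    The two parts sum exactly to the total change in enterprise value.
    """
    ebitda_lever = (new_ebitda - base_ebitda) * base_multiple
    multiple_lever = (new_multiple - base_multiple) * new_ebitda
    return ebitda_lever, multiple_lever


# Hypothetical: $20M EBITDA at 10x, moving to $22M EBITDA at 11x.
before = enterprise_value(20e6, 10.0)
after = enterprise_value(22e6, 11.0)
lever1, lever2 = value_bridge(20e6, 10.0, 22e6, 11.0)
print(f"EV change ${(after - before) / 1e6:.0f}M = "
      f"EBITDA lever ${lever1 / 1e6:.0f}M + multiple lever ${lever2 / 1e6:.0f}M")
# EV change $42M = EBITDA lever $20M + multiple lever $22M
```

Assigning the cross term (extra EBITDA times extra multiple) to the multiple lever is a convention; other splits are equally defensible, as long as you state which one you used.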

Either lever (or both) creates exit value. If you can prove it—with a baseline, a control group, market adjustment, and clear attribution—you get:

- Budget for the next initiative

- Credibility with your PE owners

- A playbook that scales

- Job security in a portfolio company

If you can’t prove it, PE assumes you’re wasting money. And wasted money means lower exit multiples. And lower exit multiples mean your PE owners made a bad investment. And bad investments don’t get funded for growth.

Start measuring now. Not adoption. Impact. The companies that do this get funded. The ones that don’t see their budgets shrink in the next round.

Guy Pistone CEO, Valere | Building AI measurement that PE owners actually trust

P.S. If you’re a VP of Ops or CAIO at a PE-backed PortCo and your PE owners are asking “where’s the AI ROI?” but you don’t have a framework to answer, let’s talk. I’m offering operators at PE-backed companies a quick diagnostic on whether your AI initiatives can be measured credibly for PE exit value. https://bit.ly/AIStrategy2026

Resources

PE + AI Strategy & ROI Measurement:

- Bain & Company: Private Equity in the AI Era – AI adoption and operationalization across PE portfolios ($3.2T AUM study, 2024)

- FTI Consulting: PE Portfolio AI Operating Models – Hub-and-spoke vs. decentralized scaling (2024)

- McKinsey & Company: How PE Firms Are Creating Value with AI (2024)

AI Implementation & Pilot Failure Rates:

- MIT NANDA Initiative: Generative AI Pilot Performance Study – 95% of enterprise AI pilots failing to drive profitability (2025)

- Stanford AI Index Report – Enterprise AI Investment vs. ROI Outcomes (2024)

PE-Specific AI Challenges & Risk:

- Citizens Financial: PE Market Report on AI Adoption and Risk Concerns – PE retreat from 2024 AI investment levels (2025)

- Clarkston Consulting: Ensuring AI Due Diligence for a Private Equity Firm – Medical device company with PE acquisition; real case: ECG AI platform risk assessment (2025)

Attribution & Measurement Frameworks:

- Withum: Private Equity Survey on AI Strategy and KPI Measurement – 36% of companies lacking basic KPIs (2024)